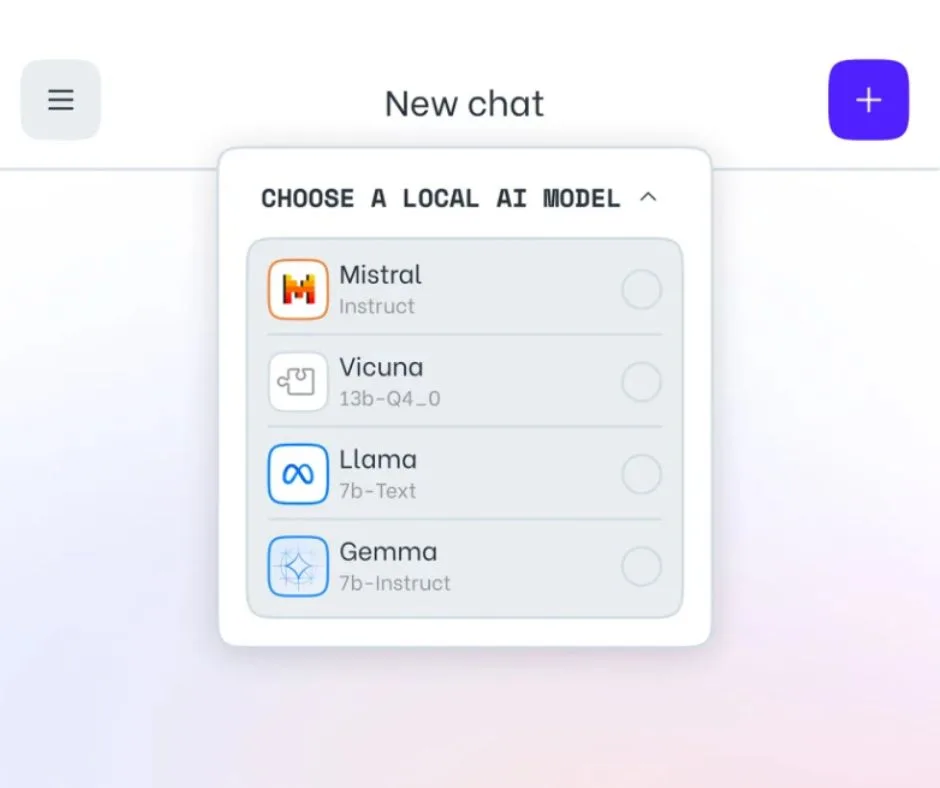

Opera has become the first major browser to offer built-in support for local large language models (LLMs), a notable shift in how AI is integrated into web browsing. Users can now run generative AI models directly in the browser, on their own devices, without relying on cloud-based processing. Let's look at what this development involves and how it could affect web users.

What Are Local LLMs?

Opera's introduction of local LLMs is a significant milestone. Large language models, trained on vast amounts of text, are the engines behind generative AI chatbots such as OpenAI's ChatGPT and Google's Gemini. With local LLMs, users download a model and run it on their own device, so prompts do not have to be sent to a cloud server. This improves privacy: prompts and conversations stay on the user's machine instead of passing through a third party's servers, where they could be accessed or exploited.

The integration offers a catalog of roughly 150 models, so users can pick the one that best fits their needs. The feature is experimental for Opera, but it opens the door to new kinds of AI-powered interactions on the web. As with any cutting-edge technology, however, there are some factors to weigh before relying on local LLMs in Opera.

Considerations for an Experimental Feature

Because the feature is still experimental, users should expect occasional bugs and inconsistent performance. Local models can also take up a lot of storage space, with some exceeding 40GB. Larger models generally produce better output, but they take longer to download and run. Performance also depends on the user's hardware: older or less powerful machines may respond noticeably more slowly.

Advantages of Local LLMs in Opera

Bringing local LLMs into Opera offers three main advantages: privacy, personalization, and wider access to AI. Because the models run locally, users can take advantage of generative AI without giving up control of their personal data. The approach also makes room for AI experiences tailored to each user and built directly into the browser. With further refinement and user feedback, it could make advanced AI tools available to a much broader audience.

Optimizing Local LLM Integration for Performance and Privacy

As users begin trying local LLMs in Opera, both performance and privacy deserve attention. Because some models demand significant storage and processing power, choosing a model suited to one's hardware, rather than simply the largest one available, strikes a better balance between speed and output quality. On the privacy side, Opera will need to be transparent and careful about how locally stored models are handled if it wants to keep users' trust in its commitment to data protection.

Opera's move into local LLMs extends the browser beyond simple web browsing, pointing toward AI that is more accessible, more personal, and more private. If the experiment matures, it could change how AI is used inside web browsers, putting capable models directly and securely in users' hands.